A structured approach to writing reliable software, progressing through three distinct levels of quality assurance.

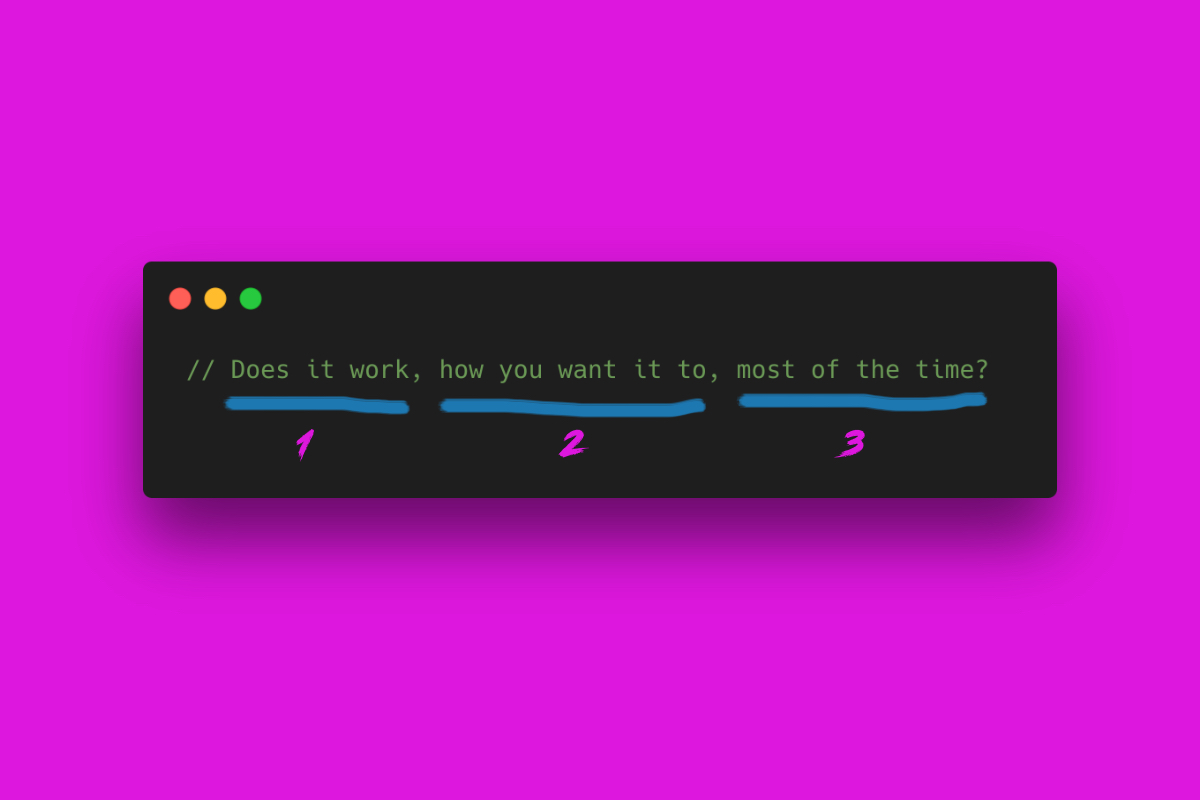

One software development quote I love is "Make it work, make it right, make it fast." To build on that idea, I want to share the personal mantra I use whenever I've come up with a solution to a software problem. I ask myself: "Does it work, how you want it to, most of the time?"

Writing quality software isn't magic. It often follows a clear, linear progression. If you've ever felt overwhelmed by testing, debugging, or making your code production-ready, it helps to build a mental model and break the problem down into three fundamental questions:

- Does it work? (Basic syntax and compilation)

- Does it work how you want it to? (Integration tests and expected behavior)

- Does it work most of the time? (Production reliability, resilience, and fault tolerance)

Each stage represents a necessary milestone in writing reliable software. Let's walk through them one by one.

1. Does it work? (Basic syntax and compilation)

The first step in any software project is ensuring the code actually runs. At this level, the concerns are rudimentary:

- Syntax errors: Typos, missing brackets, or misplaced commas, the basic mistakes that prevent code from compiling or running.

- Runtime errors: Referencing undefined variables, passing the wrong arguments, or accessing properties on values that are undefined.

- Static analysis: Linters and type checkers (ESLint, TypeScript) that catch obvious issues before the code runs.
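To make the static analysis point concrete, here is a minimal sketch using TypeScript's `// @ts-check` directive with JSDoc types in a plain JavaScript file. The `buildUserUrl` helper is hypothetical, not part of the original code. Node runs the file either way; the benefit is that a type checker (for example `tsc --noEmit --allowJs`, or an editor backed by the TypeScript language service) would flag the commented-out calls before anything executes.

```javascript
// @ts-check

/**
 * Hypothetical helper that builds the user endpoint URL.
 * @param {number} id - numeric user ID (an assumption for this sketch)
 * @returns {string}
 */
const buildUserUrl = (id) => `https://api.example.com/users/${id}`;

console.log(buildUserUrl(42)); // https://api.example.com/users/42

// Both calls below would run without crashing, producing ".../users/undefined"
// and ".../users/abc", but the type checker rejects them before execution:
// buildUserUrl();      // flagged: missing required argument `id`
// buildUserUrl("abc"); // flagged: a string is not assignable to a number
```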

Example: Code That Runs

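Consider a simple function that fetches user data:

```javascript
const fetchUser = async (id) => {
  const response = await fetch(`https://api.example.com/users/${id}`);
  return response.json();
};
```

This code is syntactically valid and runs, but it has gaps that a syntax check won't catch. Calling `fetchUser()` without an id requests `https://api.example.com/users/undefined`; an HTTP error such as a 404 goes unnoticed, because `fetch` resolves normally for error statuses; and a network failure rejects the promise with nothing here to handle it. Still, passing this first level at least ensures the code runs.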

2. Does it work how you want it to? (Integration and expected behavior)

Once the code executes without syntax errors, the next level ensures it behaves correctly. This involves:

- Unit tests: Does each function return the expected output?

- Integration tests: Do multiple components work together as expected?

- Edge cases: Does the code handle unexpected input or errors gracefully?

Example: Adding Tests

Let's improve fetchUser by validating input and handling errors:

```javascript
const fetchUser = async (id) => {
  if (!id) throw new Error("User ID is required");

  try {
    const response = await fetch(`https://api.example.com/users/${id}`);
    if (!response.ok) throw new Error("Failed to fetch user");
    // `await` here so a JSON parsing error is caught by the catch block below.
    return await response.json();
  } catch (error) {
    console.error("Error fetching user:", error);
    return null;
  }
};
```

Now, we can write tests:

```javascript
test("fetchUser should return user data", async () => {
  global.fetch = jest.fn(() =>
    Promise.resolve({
      ok: true,
      json: () => Promise.resolve({ id: 1, name: "Alice" }),
    })
  );

  const user = await fetchUser(1);
  expect(user).toEqual({ id: 1, name: "Alice" });
});

test("fetchUser should handle missing ID", async () => {
  await expect(fetchUser()).rejects.toThrow("User ID is required");
});
```

This step ensures the function behaves as expected in normal scenarios, but we're still not testing it under real-world conditions.

3. Does it work most of the time? (Production-grade reliability)

Even if code passes tests, real-world failures can still occur. Production-ready software requires additional measures:

- Redundancy: Can we retry failed requests?

- Resilience: Can it handle network failures, high load, or unexpected inputs?

- Idempotency: Is it safe to retry? Does repeating an operation produce the same result as running it once?

- Observability: Are logs and metrics available for debugging?

- Transactional safety: Does it avoid partial failures that leave data half-updated?

These questions formed the basis of my post "Chaos Engineering: Jurassic Park & Distributed Systems," which is about treating failure as an inevitability, not a possibility, and building fail-safes in from the start.
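Idempotency is what makes retries safe for operations with side effects. A common way to get it is an idempotency key: the client attaches a unique key to each logical request, and the server remembers the result for that key. The sketch below is a hypothetical, in-memory illustration (`chargeCustomer`, `processedRequests`, and the key format are all made up for this example); a real service would store keys durably, expire them, and handle two concurrent requests carrying the same key.

```javascript
// Hypothetical in-memory store: idempotency key -> result of the first call.
// A real service would persist this (with an expiry) in a shared database or cache.
const processedRequests = new Map();
let totalCharged = 0; // stands in for a real side effect, e.g. charging a card

const chargeCustomer = (idempotencyKey, amountCents) => {
  // Retrying with the same key returns the stored result instead of charging again.
  if (processedRequests.has(idempotencyKey)) {
    return processedRequests.get(idempotencyKey);
  }
  totalCharged += amountCents;
  const result = { status: "charged", amountCents };
  processedRequests.set(idempotencyKey, result);
  return result;
};

// A client that times out and retries sends the same key both times.
const first = chargeCustomer("order-123", 5000);
const retry = chargeCustomer("order-123", 5000);

console.log(first === retry); // true: the retry got the original result
console.log(totalCharged);    // 5000, not 10000
```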

Example: Making fetchUser More Resilient

To improve reliability, we can add retries and logging:

```javascript
const fetchUser = async (id, retries = 3) => {
  if (!id) throw new Error("User ID is required");

  for (let attempt = 1; attempt <= retries; attempt++) {
    try {
      const response = await fetch(`https://api.example.com/users/${id}`);
      if (!response.ok) throw new Error("Failed to fetch user");
      return await response.json();
    } catch (error) {
      // Log every failed attempt; rethrow once the last attempt has failed.
      console.warn(`Attempt ${attempt} failed:`, error);
      if (attempt === retries) throw error;
    }
  }
};
```

In production, we might also:

- Implement circuit breakers to stop sending requests (and retries) to a dependency that keeps failing.

- Use transactional guarantees if modifying data.

- Deploy observability tools like structured logs and tracing.

- Design for graceful degradation if dependent services go down.
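To make the circuit breaker and graceful degradation points concrete, here is a minimal sketch. The `CircuitBreaker` class, its thresholds, and the placeholder user object are hypothetical, and in practice you would likely reach for an existing library rather than hand-rolling one. After a set number of consecutive failures the breaker opens, so further calls fail fast without touching the struggling dependency, and the caller serves a degraded placeholder instead of an error.

```javascript
// Minimal circuit breaker sketch (names and thresholds are hypothetical).
// CLOSED: calls pass through. OPEN: calls fail fast without calling the
// dependency. After `resetTimeoutMs`, calls are let through again as a trial
// (HALF_OPEN); a success closes the circuit, a failure reopens it.
class CircuitBreaker {
  constructor(operation, { failureThreshold = 3, resetTimeoutMs = 10_000 } = {}) {
    this.operation = operation;
    this.failureThreshold = failureThreshold;
    this.resetTimeoutMs = resetTimeoutMs;
    this.failures = 0;
    this.state = "CLOSED";
    this.openedAt = 0;
  }

  async call(...args) {
    if (this.state === "OPEN") {
      if (Date.now() - this.openedAt < this.resetTimeoutMs) {
        throw new Error("Circuit open: skipping call");
      }
      this.state = "HALF_OPEN";
    }
    try {
      const result = await this.operation(...args);
      this.failures = 0;
      this.state = "CLOSED";
      return result;
    } catch (error) {
      this.failures += 1;
      if (this.state === "HALF_OPEN" || this.failures >= this.failureThreshold) {
        this.state = "OPEN";
        this.openedAt = Date.now();
      }
      throw error;
    }
  }
}

// Graceful degradation: serve a placeholder instead of failing outright.
const getUserOrFallback = async (breaker, id) => {
  try {
    return await breaker.call(id);
  } catch {
    return { id, name: "Unknown user", degraded: true };
  }
};

// Demo with a fake dependency that always fails.
let callsToDependency = 0;
const flakyFetchUser = async () => {
  callsToDependency += 1;
  throw new Error("Service unavailable");
};

const breaker = new CircuitBreaker(flakyFetchUser, { failureThreshold: 3 });

const demo = async () => {
  for (let i = 0; i < 5; i++) await getUserOrFallback(breaker, 1);
  return { state: breaker.state, callsToDependency };
};

demo().then(console.log); // { state: 'OPEN', callsToDependency: 3 }
```

Because the breaker passes its arguments straight through, the `fetchUser` function from this post could be wrapped the same way, e.g. `new CircuitBreaker(fetchUser)`. Sharing one breaker across callers is what protects the dependency: once it opens, every caller degrades immediately instead of each one waiting through its own retries.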

Conclusion

Quality software isn't built overnight. It progresses through three key stages:

- Does it work? (Basic syntax and execution checks)

- Does it work how you want it to? (Behavioral tests and edge cases)

- Does it work most of the time? (Resilience, reliability, and production hardening)

By approaching software development in this structured way, you can write code that not only works but also withstands real-world challenges. It's the difference between a script that runs on your machine and software that reliably powers a business.

Next time you write code, ask yourself: Does it work, how you want it to, most of the time?