Introduction - The Accidental Era of "AI First"

Over the last two years, you've probably noticed a strange vibe in the software world: everyone is moving at impossible speed while simultaneously questioning whether any of this will matter in five years.

We've crossed into a world where individuals can do what once required teams. A world where "engineering" sometimes means "describe the system you want and let an agent scaffold 70% of it." A world where the differentiator is no longer what you can build, but how well you understand and present what you're building.

This post is about that gap - not the gap between good engineers and bad engineers, but the widening gap between what AI can do and what humans still uniquely bring to the table. In 2025, the superpower isn't AI; it's AI plus a human who actually understands the problem... and understands humans.

What Brains Do vs. What AI Does

If you strip the biology away, a brain is three things:

- A prediction engine - constantly guessing what happens next by blending past experience with the current situation.

- A context machine - soaking up the world through messy, multimodal experience.

- A fear-driven risk assessor - optimised not for efficiency, but for survival. At this level, the brain doesn't sort the world into "good" and "bad" or "positive" and "negative", only into "safe" and "uncertain". It's why people so often choose a "familiar hell" over the risk of a "new heaven".

AI, ironically, mirrors the first two and inverts the third:

- A prediction engine - but trained on tokens, not lived moments.

- A context simulator - but only through text, images, embeddings, and approximations.

- No fear at all - and therefore no instinctive understanding of consequences. It can analyse the execution paths through your code, but can it remember the time it stayed up until 3am fixing production? Those moments get baked into your nervous system, pushing you never to repeat the experience.

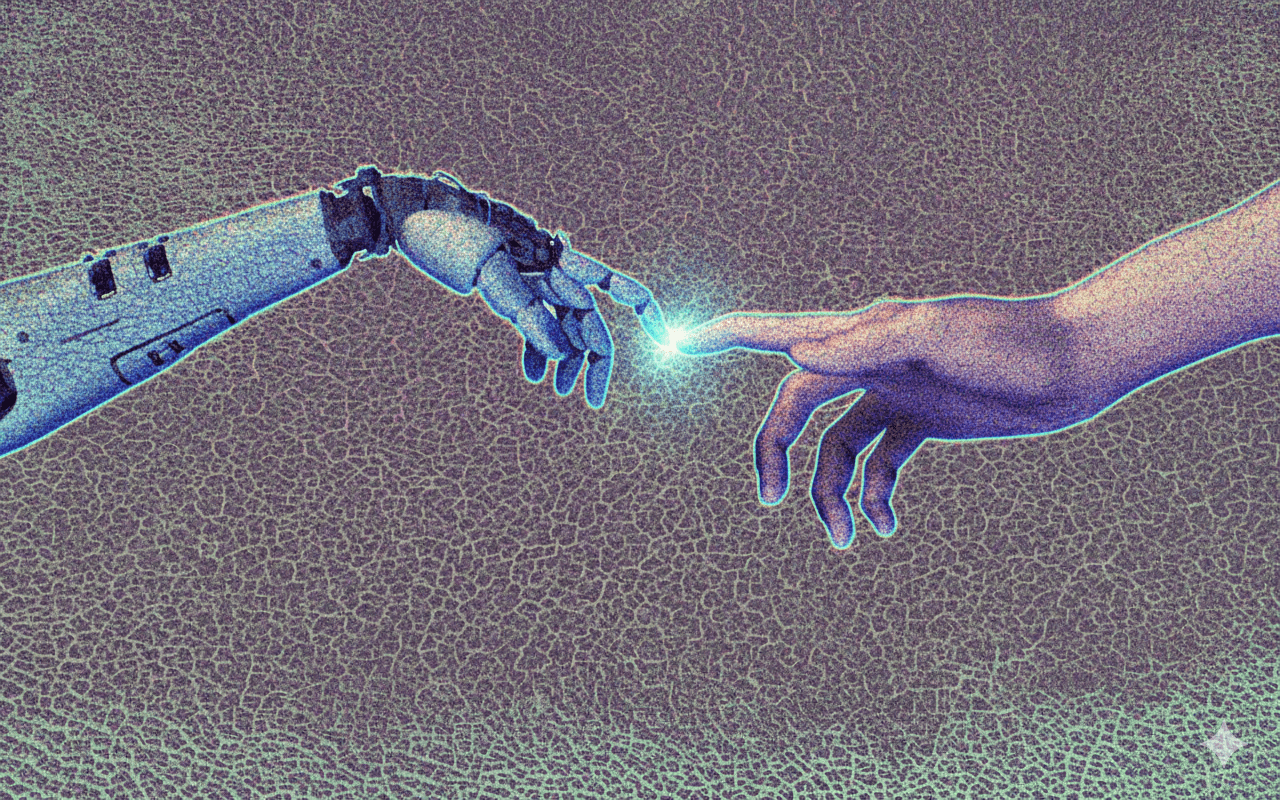

Humans have groundedness - the smell of the Sistine Chapel, the weight of a friend's head on your lap, the lived experience that can't be compressed into embeddings. AI has a kind of alien competence - breadth without personal cost, pattern recognition without pain. Where they meet is where the magic happens, but pretending they are the same thing is where developers go wrong.

The Good Will Hunting Principle

Why AI Will Never Know What the Sistine Chapel Smells Like

There's a moment in Good Will Hunting that has become, for me, the perfect metaphor for AI:

"If I asked you about art you could give me the skinny on every art book ever written… But you couldn't tell me what it smells like in the Sistine Chapel..."

In the bench scene, AI is Will: dazzlingly capable, encyclopaedic, high-bandwidth, sometimes correct before it's even finished reading the prompt.

Humans are Sean. We carry the texture of life - loss, fear, joy, consequence. AI knows the syllabus. Humans know the stakes. This is why AI alone isn't the answer, especially in domains that matter. AI is an accelerant. The human is the combustion chamber. If you and AI are singing off the same hymn sheet, it can decode the strange glyphs into how the music should sound, but it cannot feel the swell in your chest that you associate with a choral climax.

Determining Determinism Non-Deterministically

How to Know When an AI Is "Correct" When It Cannot Be Repeated Exactly

Humans secretly want AI to behave like traditional software: given X, produce Y, every time. But AI behaves more like a human who has downed three espressos and read the entire internet: given X, it produces Y, Z, or occasionally something that should be set on fire, each delivered with an earnest, confident "I've done it! Here's what I can do next!"

So how do you measure correctness in a non-deterministic system? You don't evaluate the final output; you evaluate the space of outputs:

- Is it within the acceptable region?

- Is the variance meaningful?

- Is the deviation dangerous or merely creative?

- Would a domain expert disagree? If so, why? And how do we feed that expert's feedback back into the loop to improve the overall process?

Correctness becomes a spectrum. Safety becomes a gradient. Evaluation becomes probabilistic. This is why human involvement matters - not as an afterthought, but as a co-author of the output distribution.
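Here is one way to make "evaluate the space of outputs" more concrete. This is a minimal Python sketch, not a production evaluation harness. It assumes a hypothetical `generate` callable standing in for a model call. It also assumes two predicates you would write with a domain expert: one describes the acceptable region, and the other flags outputs that are dangerous rather than merely creative. The tax-code names and the fake model are invented for illustration.

```python
import random
from dataclasses import dataclass
from typing import Callable


@dataclass
class OutputSpaceReport:
    samples: int
    acceptance_rate: float  # share of sampled outputs inside the acceptable region
    distinct_outputs: int   # a crude proxy for variance across runs
    dangerous: list[str]    # outputs that must be escalated, never shipped


def evaluate_output_space(
    generate: Callable[[str], str],
    prompt: str,
    is_acceptable: Callable[[str], bool],
    is_dangerous: Callable[[str], bool],
    samples: int = 20,
) -> OutputSpaceReport:
    """Sample one prompt repeatedly and judge the distribution, not a single answer."""
    outputs = [generate(prompt) for _ in range(samples)]
    accepted = sum(1 for o in outputs if is_acceptable(o))
    return OutputSpaceReport(
        samples=samples,
        acceptance_rate=accepted / samples,
        distinct_outputs=len(set(outputs)),
        dangerous=sorted({o for o in outputs if is_dangerous(o)}),
    )


# Hypothetical setup: a fake "model" that is usually right, sometimes creative,
# and occasionally invents a tax code that doesn't exist.
VALID_CODES = {"software", "office_expense", "meals", "travel"}
rng = random.Random(7)


def fake_model(prompt: str) -> str:
    return rng.choices(
        ["software", "office_expense", "meals", "made_up_code"],
        weights=[70, 20, 8, 2],
    )[0]


report = evaluate_output_space(
    fake_model,
    "Classify: monthly design-tool subscription",
    is_acceptable=lambda o: o in {"software", "office_expense"},
    is_dangerous=lambda o: o not in VALID_CODES,
)
print(report)
```

The report's shape is the useful signal. A falling acceptance rate, a jump in distinct outputs, or even a single dangerous sample is the cue to pull a human into the loop.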

The Human-in-the-Loop Advantage - Lessons From WorkMade

At WorkMade, I worked closely with our in-house tax expert - a man with 20+ years of experience reading IRS forms like other people read cereal boxes.

We built systems that:

- Automatically classified user transactions into tax codes.

- Prepared IRS forms programmatically.

- Used AI to infer categories, reduce manual work, and accelerate decision-making.

But here's the important part: we never once assumed the AI was "right." Instead, we assumed it was fast. We built:

- Escape hatches.

- Review checkpoints.

- Overrides.

- Confidence thresholds.

- Explanations.

- Human re-routing mechanisms.

- Continuous correction loops guided by the tax expert.

AI did the brute-force first pass. Our expert handled the nuance. The system didn't replace expertise; it scaled it. That's the pattern teams miss: AI doesn't eliminate the human. AI amplifies the human - if the system is designed correctly. The human is the core logic; AI is the pool of serverless lambdas you scale horizontally to maximise throughput.
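The mechanisms listed above can be sketched in a few dozen lines. This is a hypothetical illustration, not WorkMade's actual code. The `Classification` and `ReviewPipeline` names, the tax codes, and the 0.85 confidence threshold are all assumptions. The sketch shows four pieces:

- a confidence threshold that decides what gets auto-accepted;
- a review queue for everything else;
- an expert override that always wins;
- a log of disagreements that can feed the next correction loop.

```python
from dataclasses import dataclass, field

# Hypothetical threshold for illustration; a real value would be tuned with the domain expert.
CONFIDENCE_THRESHOLD = 0.85


@dataclass
class Classification:
    transaction_id: str
    tax_code: str
    confidence: float  # model-reported confidence between 0.0 and 1.0
    explanation: str   # shown to the reviewer so they can see why the model chose it


@dataclass
class ReviewPipeline:
    threshold: float = CONFIDENCE_THRESHOLD
    accepted: list[Classification] = field(default_factory=list)
    review_queue: list[Classification] = field(default_factory=list)
    # (transaction_id, ai_code, expert_code): disagreements to learn from
    corrections: list[tuple[str, str, str]] = field(default_factory=list)

    def route(self, c: Classification) -> str:
        """AI makes the first pass; anything below the threshold goes to a human."""
        if c.confidence >= self.threshold:
            self.accepted.append(c)
            return "auto"
        self.review_queue.append(c)
        return "human"

    def expert_decision(self, transaction_id: str, expert_code: str) -> Classification:
        """Override / escape hatch: the expert's call always wins and is recorded."""
        for i, c in enumerate(self.review_queue):
            if c.transaction_id == transaction_id:
                del self.review_queue[i]
                if expert_code != c.tax_code:
                    # Each disagreement becomes input to the next correction loop.
                    self.corrections.append((transaction_id, c.tax_code, expert_code))
                final = Classification(transaction_id, expert_code, 1.0, "expert decision")
                self.accepted.append(final)
                return final
        raise KeyError(f"{transaction_id} is not awaiting review")


pipeline = ReviewPipeline()
pipeline.route(Classification("t1", "software", 0.97, "recurring SaaS vendor"))
pipeline.route(Classification("t2", "office_expense", 0.55, "ambiguous merchant name"))
pipeline.expert_decision("t2", "equipment")  # the expert disagrees with the model
print(pipeline.corrections)  # [('t2', 'office_expense', 'equipment')]
```

The design choice worth copying is that the expert's decision is recorded, not just applied. The `corrections` list is what turns one person's judgement into test cases, prompt changes, or training data.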

The Amygdala Architect - How Fear Becomes a Feature

People sometimes assume great architecture comes from calm, serene minds.

Some of the best engineering I've seen - and, if I'm honest, my own best work - has come from a kind of wired, hyper-attentive intensity. The kind that doesn't choose to analyse the edge case; it can't stop analysing it.

My overactive amygdala means I'm constantly scanning, anticipating, modelling. It's brilliant for building systems. It's exhausting for being a person. Almost certainly exhausting for my wife too...

This intensity is a blessing and a curse.

The Blessing

It gives you the "architect brain":

- You see the failure modes before anyone else even sees the system.

- You instinctively build in escape valves, retries, and guard rails.

- You predict downstream consequences with eerie accuracy.

- You design for resilience because your nervous system doesn't trust anything that can't survive an outage.

- You assume things will break - and then design systems that survive that assumption.

This isn't pessimism. It's protective pattern-recognition.

It's the same biological wiring that kept your ancestors alive, repurposed for distributed systems.

The Curse

But that same intensity doesn't switch off just because the meeting ends or the PR is merged.

It means:

- Your brain keeps simulating failure in everyday life.

- You obsess over unlikely risks with the same seriousness as likely ones.

- Your nervous system sits closer to "alert" than "rest."

- You carry architectural vigilance into places where it doesn't belong.

It's the classic engineer's paradox:

The things that make you great at your craft often make life feel heavier than it needs to be.

And yet - and this is important - when directed correctly, that same intensity becomes your differentiator in an AI-first world.

AI is calm. AI is neutral. AI has no instinct for danger and no felt sense of consequence. AI cannot be paranoid - and paranoia, in the right dose, is foresight.

Your intensity isn't an inconvenience. It's the complementary ingredient AI lacks:

- Gut-level modelling of risk

- Emotional simulation of failure

- Embodied understanding of consequences

- An almost spiritual commitment to "this must not break"

If AI is acceleration, intensity is directional caution.

Together, they create systems that are fast and safe - and that combination is becoming rarer, and therefore more valuable.

In my own work, that instinct shows up as concrete habits:

- I plan for failure paths before success paths.

- I ask "what if this breaks?" before "what if this works?"

- I model worst-case scenarios instinctively.

- I design with escape valves, backpressure, retries, and dead-letter queues by default.
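Those defaults translate fairly directly into code. Below is a minimal Python sketch of two of them: retries with exponential backoff, and a dead-letter queue. It assumes a hypothetical in-memory list of messages and a `handler` function of your own. The function and parameter names are invented for illustration. In a real system you would usually lean on your queue or broker's built-in retry and dead-letter support rather than a hand-rolled loop. Backpressure is left out to keep the sketch short.

```python
import time
from typing import Any, Callable


def process_with_retries(
    messages: list[Any],
    handler: Callable[[Any], None],
    max_attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> list[tuple[Any, str]]:
    """Try each message up to max_attempts times, backing off exponentially.

    Messages that still fail are parked in a dead-letter queue instead of being
    dropped or retried forever, so a human can inspect them later.
    """
    dead_letters: list[tuple[Any, str]] = []
    for msg in messages:
        for attempt in range(1, max_attempts + 1):
            try:
                handler(msg)
                break  # success: move on to the next message
            except Exception as exc:
                if attempt == max_attempts:
                    dead_letters.append((msg, repr(exc)))  # give up, but keep the evidence
                else:
                    sleep(base_delay * 2 ** (attempt - 1))  # base, 2x base, 4x base, ...
    return dead_letters


# Hypothetical handler: "flaky" fails once then recovers; "poison" always fails.
calls: dict[str, int] = {}


def flaky_handler(msg: str) -> None:
    calls[msg] = calls.get(msg, 0) + 1
    if msg == "poison":
        raise ValueError("cannot parse")
    if msg == "flaky" and calls[msg] == 1:
        raise TimeoutError("upstream timed out")


dlq = process_with_retries(["ok", "flaky", "poison"], flaky_handler, sleep=lambda s: None)
print(dlq)  # [('poison', "ValueError('cannot parse')")]
```

Injecting `sleep` keeps the backoff testable. Parking the poison message instead of retrying it forever is the real point of the pattern: failure gets a place to land where a human can look at it.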

Fear - in the right container - is foresight. AI cannot feel fear. It cannot anticipate catastrophic edge cases from internal anxiety. It cannot emotionally simulate "what happens if this fails and thousands of people rely on it." But humans can. That is not a bug; it is the defining complement to AI's strengths.

How Developers Should Use AI in 2025

AI is no longer "autocomplete but bigger." It is now a junior engineer, a research assistant, a prototyper, a debugger, a pair programmer, a brainstorming partner, and a brute-force executor of detail. But the developer remains indispensable for:

- Problem definition - AI can attack almost any problem you hand it; it cannot choose which one is worth solving.

- Context building - grounding the problem in reality, not tokens.

- Risk modelling - thinking through failure modes AI cannot intuitively imagine.

- Domain alignment - translating expertise into constraints.

- Decision ownership - choosing what is "good enough," what is "dangerous," and what is "wrong."

- Ethical navigation - determining where automation stops, where it's irresponsible to let AI continue, and where a human is required for care.

AI accelerates execution. Humans determine direction.

The Future - Hybrid Intelligence as a Competitive Advantage

The founders who win in this new era won't be the ones who automate everything. They'll be the ones who:

- Understand the problem viscerally.

- Use AI as leverage, not outsourcing.

- Build human fallibility into the system.

- Build human wisdom into the loop.

- Recognise where AI ends and where experience begins.

- Treat the amygdala not as a glitch but as a strategic asset.

AI is the engine. Humans are the steering wheel. You need both to get anywhere worth going.

Closing - The Bench Scene Revisited

In Good Will Hunting, Sean tells Will a truth AI will never understand:

Experience is not data. Suffering is not a dataset. Love is not an embedding. Fear is not a token. Wisdom cannot be scraped from Wikipedia.

AI is brilliant. But it has never smelled the Sistine Chapel. The future will belong to the builders who remember that.